AI for Accessibility

Role: UX/Design Technologist

Tech: React, OpenAI API, Cloudflare, Accessibility

Year: 2023 — 2024

I have been exploring how AI can solve real-world problems, with a focus on accessibility and user-centered solutions. Below are three key projects where I used recent AI models to make digital tools more efficient and more inclusive.

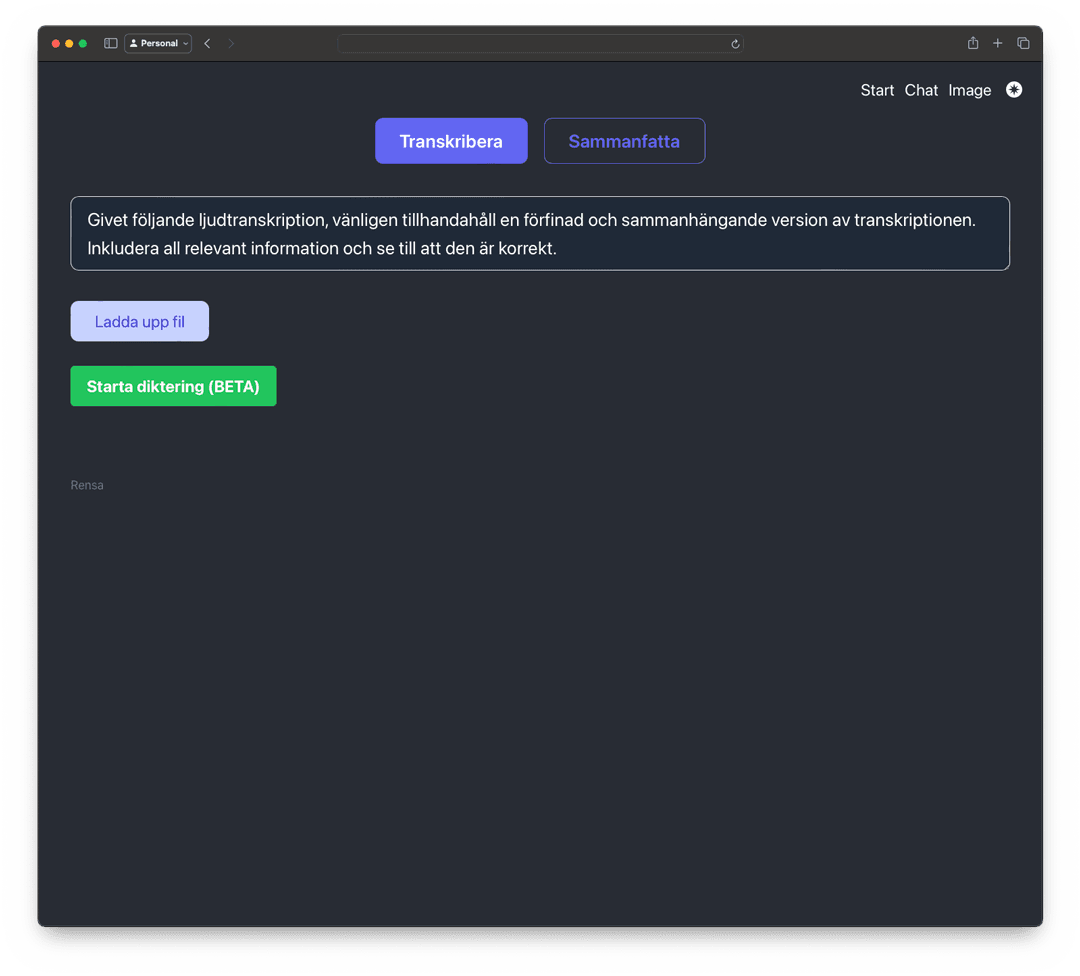

Transcription Tool Development

Tech: React, Whisper API, GPT-3.5

Background:

I identified a significant problem that my mother faced in her work. She has worked as a meeting secretary, traditionally relying on pen and paper for note-taking. Her work involves writing detailed protocols (minutes) from complex meetings with union representatives, which demands accuracy and thoroughness.

Challenge:

The challenge arose when she tried to switch to digital note-taking. Existing dictation tools made the shift cumbersome: they produced poorly structured text that was hard to follow.

Solution:

In the summer of 2023, I built a transcription tool using the Whisper API and GPT-3.5. The goal was partly to learn more about what AI could do and partly to improve text structure and speaker identification. The prototype significantly streamlined her workflow, making note-taking and writing protocols much more efficient.
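The tool's code is not part of this page, so the following is only a TypeScript sketch of how a Whisper + GPT-3.5 pipeline of this kind can be wired up. It assumes Whisper's raw text (from `POST /v1/audio/transcriptions` with model `whisper-1`) is already available and shows the second step: splitting the transcript into chunks and building `POST /v1/chat/completions` request bodies that ask `gpt-3.5-turbo` to add structure and speaker labels. The prompt wording, the 6,000-character chunk size, and the helper names `chunkTranscript` and `buildStructuringRequests` are hypothetical.

```typescript
// Hypothetical sketch, not the original implementation.
// Step 1 (not shown): Whisper returns raw text from POST /v1/audio/transcriptions.
// Step 2 (shown): build chat-completion bodies that restructure that text.

interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

interface ChatCompletionRequest {
  model: string;
  temperature: number;
  messages: ChatMessage[];
}

// Assumed instruction text for turning a transcript into structured minutes.
const STRUCTURE_PROMPT =
  "You turn raw meeting transcripts into structured minutes. " +
  "Label speakers where the text makes it clear, group the discussion " +
  "by topic, and list decisions and action points at the end.";

// Split a transcript into chunks of at most maxChars characters, breaking
// only at sentence ends so each request fits the model's context window.
function chunkTranscript(text: string, maxChars = 6000): string[] {
  // The lookbehind keeps the punctuation attached to its sentence.
  const sentences = text
    .trim()
    .split(/(?<=[.!?])\s+/)
    .filter((s) => s.trim() !== "");
  const chunks: string[] = [];
  let current = "";
  for (const sentence of sentences) {
    const candidate = current === "" ? sentence : `${current} ${sentence}`;
    if (candidate.length > maxChars && current !== "") {
      chunks.push(current);
      current = sentence; // an over-long single sentence becomes its own chunk
    } else {
      current = candidate;
    }
  }
  if (current !== "") chunks.push(current);
  return chunks;
}

// Build one request body per chunk for POST /v1/chat/completions.
function buildStructuringRequests(transcript: string): ChatCompletionRequest[] {
  return chunkTranscript(transcript).map(
    (chunk): ChatCompletionRequest => ({
      model: "gpt-3.5-turbo",
      temperature: 0.2, // low temperature keeps the rewrite close to the source
      messages: [
        { role: "system", content: STRUCTURE_PROMPT },
        { role: "user", content: chunk },
      ],
    }),
  );
}
```

Each body would be sent with an `Authorization: Bearer <key>` header and the structured sections joined back together. Note that the Whisper API itself does not identify speakers, so speaker labels added this way can only be as reliable as the cues in the transcript.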

Impact:

The tool reduced my mother's post-meeting workload. It also proved useful in user testing sessions, where it sped up gathering insights and preparing presentations.

Update January 2025: Notely AI launched

Get perfect notes and transcriptions with AI.

Designed to be reliable, simple, private, and powerful.

https://notely.se/

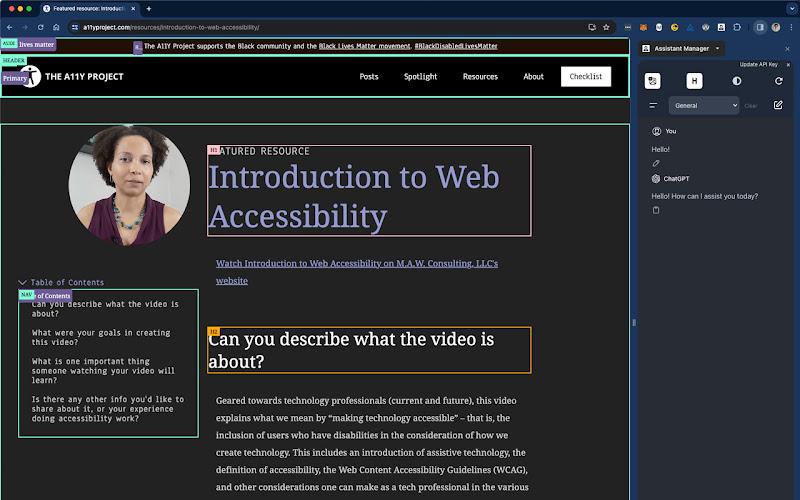

Assistants API Development

Tech: React, Assistants API, Vision API, GPT-4

Background:

Inspired by the release of GPT-4 and the possibilities it opened up, I started building on OpenAI's Assistants API in the fall of 2023. Starting from a boilerplate template on GitHub, I set out to explore the capabilities of custom GPTs and how they could make content more accessible.

Challenge:

The primary challenge was to understand the Assistants API well enough to use its full potential. Together with GPT-4, it introduced significant new capabilities, including a code interpreter and the ability to analyze large amounts of text and uploaded documents. This made it possible to summarize and analyze many file types, including PDFs and Word documents, much faster. The goal was to build on these capabilities to create a versatile tool that could support several parts of content creation and management.

Solution:

The solution evolved into a multifaceted application of the Assistant API:

Document Analysis: Utilizing GPT-4's ability to process and summarize large documents quickly.

Content Enhancement: Using the API to suggest improvements and additions to existing content.

Creative Assistance: Employing the API for brainstorming and generating creative content ideas.

The project resulted in a Chrome extension that combined these features into a single tool for content creators and editors.
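As a rough illustration of the Assistants API side, the TypeScript sketch below builds a `POST /v1/assistants` request body for each of the three features listed above, plus the request headers (the Assistants API requires an `OpenAI-Beta` header). The `Mode` names, the instruction text, and the `gpt-4-1106-preview` default model are assumptions, not the extension's actual configuration.

```typescript
// Hypothetical sketch: one assistant configuration per extension feature.
// Body shape follows OpenAI's POST /v1/assistants; mode names, instructions,
// and the default model id are illustrative assumptions.

type Mode = "documentAnalysis" | "contentEnhancement" | "creativeAssistance";

interface AssistantTool {
  type: "code_interpreter";
}

interface CreateAssistantRequest {
  model: string;
  name: string;
  instructions: string;
  tools: AssistantTool[];
}

// Assumed instructions for each of the three features.
const MODE_INSTRUCTIONS: Record<Mode, string> = {
  documentAnalysis:
    "Summarize the provided document and list its key points in plain language.",
  contentEnhancement:
    "Suggest concrete improvements and additions to the provided text.",
  creativeAssistance:
    "Brainstorm several distinct content ideas based on the user's topic.",
};

function buildAssistantRequest(
  mode: Mode,
  model = "gpt-4-1106-preview", // assumed GPT-4 Turbo preview id from late 2023
): CreateAssistantRequest {
  return {
    model,
    name: `Content helper (${mode})`,
    instructions: MODE_INSTRUCTIONS[mode],
    // Code interpreter lets the assistant run code over uploaded files;
    // here it is enabled only for document analysis.
    tools: mode === "documentAnalysis" ? [{ type: "code_interpreter" }] : [],
  };
}

// Headers for Assistants API calls, including the required beta header.
function assistantHeaders(apiKey: string): Record<string, string> {
  return {
    Authorization: `Bearer ${apiKey}`,
    "Content-Type": "application/json",
    "OpenAI-Beta": "assistants=v2",
  };
}
```

Once an assistant exists, the usual Assistants API flow is to create a thread, add the user's message (optionally with an uploaded file) to it, and start a run on that thread, then read the assistant's reply from the thread's messages.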

Impact:

The extension significantly streamlined several parts of creating, analyzing, and managing content. Its success sparked interest in broader applications and a possible scale-up using a corporate OpenAI account.

Transforming Visual Content into Narrated Experiences with AI

Tech: Flutter, Dart, Vision API, GPT-4, ElevenLabs

Background:

During the winter of 2023 and early 2024, I saw the potential to build a real-time vision AI that could help visually impaired people see the world through their phones. Using Flutter and the Vision API, I developed an application that helps users understand their surroundings, bridging the gap between visual content and users who rely on accessibility features.

Challenge:

The main hurdle was converting the visual information in images into descriptive text that was easy to understand and could be narrated aloud, making the content accessible to a wider audience.

Solution:

The application captures images and sends them to OpenAI for analysis, and the model turns the visual data into descriptive text. That text is then passed to ElevenLabs' speech synthesis, which uses a cloned voice for a personalized narration experience.
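The original app was written in Flutter/Dart; to keep one language across this page, here is a TypeScript sketch of the same two requests: a chat-completion body that sends a captured JPEG to OpenAI as a base64 data URL, and an ElevenLabs text-to-speech request for the resulting description. The prompt, the `gpt-4-vision-preview` and `eleven_multilingual_v2` model ids, and the helper names are assumptions; `voiceId` would be the ID of the cloned voice.

```typescript
// Hypothetical sketch of the capture -> describe -> speak pipeline.
// Request shapes follow OpenAI chat completions (image input) and the
// ElevenLabs text-to-speech endpoint; prompt and model ids are assumptions.

type ContentPart =
  | { type: "text"; text: string }
  | { type: "image_url"; image_url: { url: string } };

interface VisionMessage {
  role: "user";
  content: ContentPart[];
}

interface VisionRequest {
  model: string;
  max_tokens: number;
  messages: VisionMessage[];
}

// Body for POST https://api.openai.com/v1/chat/completions.
function buildVisionRequest(
  jpegBase64: string,
  model = "gpt-4-vision-preview", // assumed; newer models such as gpt-4o also accept images
): VisionRequest {
  return {
    model,
    max_tokens: 300, // keep descriptions short so narration starts quickly
    messages: [
      {
        role: "user",
        content: [
          {
            type: "text",
            text: "Briefly describe this scene for a visually impaired person. Mention obstacles first.",
          },
          // The captured photo is sent inline as a base64 data URL.
          { type: "image_url", image_url: { url: `data:image/jpeg;base64,${jpegBase64}` } },
        ],
      },
    ],
  };
}

interface SpeechRequest {
  url: string;
  headers: Record<string, string>;
  body: { text: string; model_id: string };
}

// POST request that turns the description into audio in the given voice.
function buildSpeechRequest(text: string, voiceId: string, apiKey: string): SpeechRequest {
  return {
    url: `https://api.elevenlabs.io/v1/text-to-speech/${encodeURIComponent(voiceId)}`,
    headers: {
      "xi-api-key": apiKey,
      "Content-Type": "application/json",
      Accept: "audio/mpeg",
    },
    body: { text, model_id: "eleven_multilingual_v2" },
  };
}
```

The ElevenLabs endpoint responds with audio bytes that the app can play directly. Keeping the description short matters for a real-time tool, since every extra token adds delay before narration begins.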

Impact:

The prototype demonstrates how AI can enhance digital accessibility: by converting visual data into narrated descriptions, it makes visual content available to people with visual impairments. It is still a work in progress, but it shows what AI could make possible for accessibility.

Update May 2024: GPT-4o

With the introduction of GPT-4o and OpenAI's collaboration with Be My Eyes, a similar real-time vision feature is coming soon. It's exciting that I was experimenting with similar solutions just a few months before the announcement.